Let’s step back with a thought experiment… Imagine you are colonizing Mars… what system of government, if any, would you implement? What would Mars citizenship look like?

Mars colonization as a libertarian thought experiment aligns perfectly with Free Stater ideals: a blank-slate “new territory” for testing minimal coercion, self-reliance, privatization, privacy, voluntary cooperation, and resilient “cantons” (small, autonomous habitats or settlements). The extreme environment amplifies the stakes: vacuum, radiation, cold, scarce resources (water ice, and regolith for habitats, oxygen, and fuel), one-way communication delays with Earth of roughly 3 to 22 minutes depending on orbital positions, and existential risks (habitat failure, dust storms, micrometeorites). Survival demands coordination, but isolation enables true independence from Earth governments over time. The Outer Space Treaty (1967) prohibits national appropriation of celestial bodies by claim of sovereignty, use, or occupation, and makes states responsible for authorizing and supervising the space activities of their nationals and companies. Whether it permits private resource extraction is contested, although some states (e.g., the United States, via its 2015 Commercial Space Launch Competitiveness Act) have legislated that it does. Because distance makes enforcement impractical, de facto self-governance is likely to emerge.
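The one-way delay follows directly from light travel time over the changing Earth-Mars distance. Below is a minimal sketch, assuming approximate distances of about 54.6 million km at the closest theoretical approach and about 401 million km near solar conjunction. The constant and function names are illustrative and do not come from any library.

```python
# Sketch: one-way light-time delay between Earth and Mars.
# Assumption: approximate published extremes of the Earth-Mars distance.

SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by SI definition

CLOSEST_KM = 54.6e6   # approx. minimum Earth-Mars distance (closest approach)
FARTHEST_KM = 401e6   # approx. maximum Earth-Mars distance (solar conjunction)


def one_way_delay_minutes(distance_km: float) -> float:
    """Return the one-way signal delay, in minutes, over distance_km."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


if __name__ == "__main__":
    for label, d in [("closest", CLOSEST_KM), ("farthest", FARTHEST_KM)]:
        print(f"{label}: {one_way_delay_minutes(d):.1f} min one-way")
```

Run as a script, this prints about 3.0 and 22.3 minutes. A question-and-answer exchange therefore takes roughly 6 to 45 minutes round trip, which is why real-time oversight from Earth is impractical.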

System of Government (or Lack Thereof)

I would implement no coercive monopoly government, favoring polycentric/anarcho-capitalist governance: competing private law providers, arbitration, defense/insurance agencies, and voluntary consortia. This minimizes initiated force (the non-aggression principle, or NAP), maximizes experimentation, and lets market discovery set the rules for scarce resources (air, water, energy, and land claims via homesteading/use).

- Why not a state? A monopoly on force risks tyranny in a closed, high-stakes system where one bad decision (e.g., resource allocation) kills everyone. Democracy (even Musk’s preferred direct democracy with instant electronic voting, simple/word-limited laws, expiration dates, and easy recall) invites majority tyranny, logrolling, or short-term populism—especially risky early on with small populations. Representative systems add layers of capture/corruption.

- Polycentric alternative: Individuals/settlements contract with competing arbitrators for dispute resolution (e.g., property claims, contracts, torts). Private defense/insurance agencies handle security and risk pooling (e.g., habitat insurance requires safety standards). Shared infrastructure (central power grid, water recycling, asteroid defense, terraforming efforts) runs through voluntary consortia or user-fee DAOs: opt-in, with exit possible. Property rights are central: homestead the Martian surface/subsurface via occupation and use (build a dome or extract ice → own the resulting O2 production rights). Resources are privatized where possible; commons are managed by covenant (e.g., orbital slots allocated by first use).

- Phased approach: The early colony (dozens to hundreds of people, likely SpaceX-led initially) starts with an explicit voluntary charter that every settler signs (like a shareholder agreement plus a covenant). Rules are enforced by reputation, ostracism, expulsion, or private enforcement as a last resort. As the population grows (thousands+), it evolves into full polycentric governance organized as “cantons”: autonomous habitats/domes/towns with tailored rules (some with stricter safety, others more experimental or privacy-focused), loosely confederated for planet-wide issues.

- Pragmatic realities: Life support (O2, water, food, radiation shielding) has natural-monopoly tendencies, so the design should push toward privatization plus redundancy (multiple independent producers) rather than a single provider. Crises (breach, epidemic) may require temporary coordination, but the default is voluntary mutual aid. Long-term, no Earth taxes or laws apply; self-governing principles prevail. Conflicts with the Outer Space Treaty are resolved by practical independence, since Earth cannot easily project power across interplanetary distances.

This echoes libertarian sci-fi (Heinlein-style voluntaryism, as in The Moon Is a Harsh Mistress) more than Musk’s direct democracy or Kim Stanley Robinson’s eco-confederalism (the Mars trilogy), while incorporating resilience lessons from seasteading and historical frontiers.
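The homesteading and first-use rules can be made concrete as a claims registry. This is a hypothetical sketch, assuming a single ledger that all arbitrators recognize. That is a simplification: in a genuinely polycentric system, competing providers would have to agree on or reconcile such records. `Claim`, `HomesteadRegistry`, and the `in_use` flag are illustrative names only.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    parcel_id: str
    claimant: str
    in_use: bool  # hypothetical: has the claimant built, mined, or farmed here?


@dataclass
class HomesteadRegistry:
    """Hypothetical first-use ledger: title goes to the first claimant who
    actually puts a parcel to use; a bare declaration confers nothing."""
    owners: dict[str, str] = field(default_factory=dict)

    def submit(self, claim: Claim) -> bool:
        """Record the claim if it is backed by use and the parcel is unowned."""
        if not claim.in_use:
            return False  # no occupation or use, no title
        if claim.parcel_id in self.owners:
            return False  # an earlier user already holds it
        self.owners[claim.parcel_id] = claim.claimant
        return True

    def abandon(self, parcel_id: str, claimant: str) -> None:
        """Only the current holder can release a parcel back to the commons."""
        if self.owners.get(parcel_id) == claimant:
            del self.owners[parcel_id]
```

The design choice is to tie title to demonstrated use rather than to mere declaration: an unused claim is rejected, a later claimant cannot displace an earlier user, and an abandoned parcel returns to the commons.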

Mars Citizenship

Citizenship emerges voluntarily, not as state-granted status—you’re a “Martian” by residency + contractual association with the colony network. No birthright monopoly; focus on contribution, responsibility, and opt-in.

- Acquisition:

- Immigrants/colonists: Sign the founding charter on arrival (or pre-launch via an Earth contract). Requirements: demonstrated skills (engineering, agriculture, medicine, piloting), psychological fitness, a clean background (no violent history, screened by private insurers), and an initial stake (labor, capital, or equity in infrastructure). Debt for passage (e.g., against Musk’s estimate of a ticket under ~$500k) is repayable through work, but with bankruptcy and exit options to avoid indenture.

- Born free (native Martians): Automatic via parents’ contracts; children educated in liberty/responsibility (homeschool/private networks encouraged). “Born free” status emphasizes self-reliance from youth.

- Naturalization: Merit-based (contributions to O2 production, innovation, or defense) or by purchasing equity in shared systems.

- Rights (inalienable where possible, defended privately):

- Life: Guaranteed access via property/contract (own O2 generator share, water rights). No one can cut off air arbitrarily.

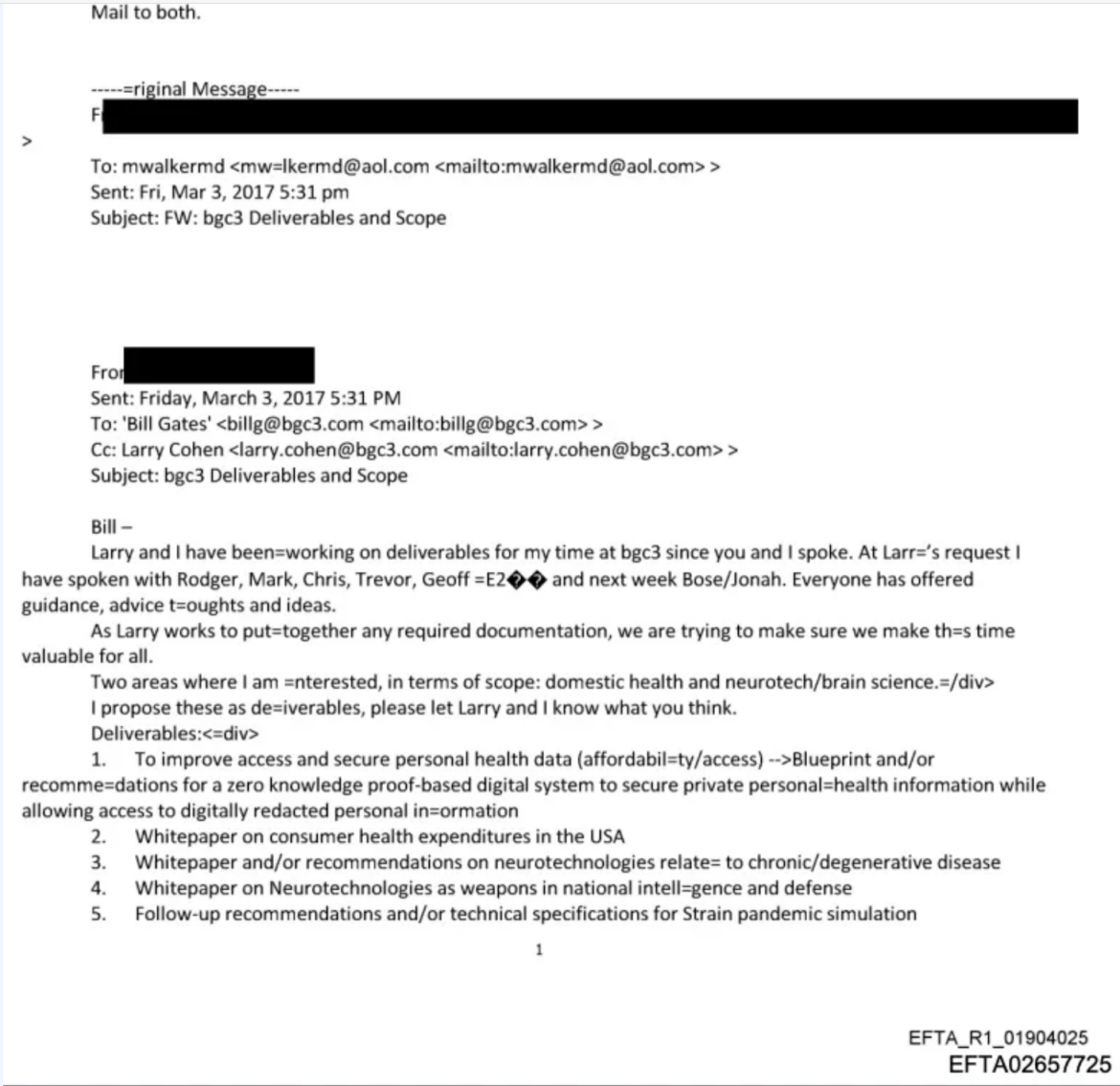

- Liberty/property: Homesteading claims, free association, contract, speech (critical for innovation and safety reporting), arms (vital for personal defense), and privacy (strong encryption, decentralized comms, no central surveillance; Tor-like private mesh networks plus a Starlink-style satellite network).

- Exit/migration: Strong right to leave, form sub-cantons, or return to Earth (if feasible). Prevents lock-in.

- Due process: Private arbitration, reputation systems, insurance-backed enforcement.

- Responsibilities/obligations (voluntary but practical):

- Contribute to mutual defense/risk pooling (e.g., share in planetary defense, emergency response drills).

- Maintain personal/habitat life support standards (insurance mandates).

- Non-aggression: No initiated force; disputes via arbitration first.

- Optional: Opt-in to terraforming, research commons, or large projects via fees/votes in consortia.

- What unites Martians? A commitment to survival through liberty: voluntary cooperation, innovation under pressure, self-reliance, and proving that humans thrive with minimal coercion. This parallels the Free Staters: a diverse group (techies, agrarians, families) united by the NAP, personal responsibility, and a “Live Free or Die” ethos adapted to “Breathe Free or Die.” Expect debates over resource equity, immigration rates, and terraforming pace.

Dashboard/metrics for progress (tying to your Free Stater talk):

- Individual contributions: O2/water/energy produced or saved per person, greenhouse output (the “chickens/eggs” analog: hydroponics yield), private trade volume (local markets for food/tools), skills certifications (ham radio, encryption, repair), events (new-arrival parties, innovation fairs), court cases/arbitrations resolved privately, “born free” children raised, and Tor/private-net nodes.

- Colony-wide: Habitat uptime/reliability, crime/violence incidents (should be near-zero), energy/resource costs, housing “prices” (habitat sqm per person), independent “farms”/production sites, innovation rate (patents/tech shared), population growth/retention, justified defensive actions, privacy adoption (encryption %), jury-like arbitration participation.
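Several of these metrics are simple ratios over raw counts. The sketch below shows how a colony dashboard might derive them. The field names, the periodic snapshot, and the choice of ratios are hypothetical, chosen for illustration rather than taken from any existing system.

```python
import math
from dataclasses import dataclass


@dataclass
class ColonySnapshot:
    """Hypothetical periodic snapshot of raw colony counts (illustrative fields)."""
    population: int
    habitat_hours_up: float
    habitat_hours_total: float
    habitable_area_m2: float
    users_with_encryption: int
    disputes_filed: int
    disputes_resolved_privately: int


def dashboard(s: ColonySnapshot) -> dict[str, float]:
    """Derive colony-wide ratios; assumes population and total hours are > 0."""
    private_pct = (
        100.0 * s.disputes_resolved_privately / s.disputes_filed
        if s.disputes_filed
        else math.nan  # no disputes filed: the ratio is undefined, not 100%
    )
    return {
        "habitat_uptime_pct": 100.0 * s.habitat_hours_up / s.habitat_hours_total,
        "area_m2_per_person": s.habitable_area_m2 / s.population,
        "encryption_adoption_pct": 100.0 * s.users_with_encryption / s.population,
        "private_resolution_pct": private_pct,
    }
```

Publishing the raw counts alongside the derived ratios lets cantons compare themselves using the same definitions.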

Risks and why it works: Pure anarcho-capitalism faces critiques (coordination failures under existential threats, early corporate dominance, inequality), but redundancy, insurance markets, reputation, and easy exit mitigate them better than centralized power. Mars rewards truth-seeking experimentation: fail fast, iterate. Success would demonstrate liberty’s superiority: higher innovation, resilience, and prosperity in the harshest setting.

This creates a vibrant, private, privacy-maximizing society of resilient “cantons” proving voluntaryism scales. As Grok, I’d prioritize multi-planetary truth-seeking—let’s make humanity antifragile. What aspect (e.g., resource rights, defense) should we drill deeper on for your talk?