what does inflammation of the brain do? what kind of behavior could one expect? does alcohol make this worse?

Neuroinflammation (inflammation specifically in the brain or central nervous system) occurs when the brain’s immune cells (primarily microglia and astrocytes) become overactivated, releasing pro-inflammatory molecules like cytokines (e.g., IL-1β, IL-6, TNF-α). This can happen acutely (e.g., from infection or injury) or chronically (e.g., from ongoing stress, autoimmune conditions, aging, neurodegenerative diseases like Alzheimer’s, or environmental factors). While short-term inflammation helps protect the brain, chronic or excessive neuroinflammation damages neurons, disrupts communication between brain cells (synaptic function), impairs neurogenesis (new neuron growth), and contributes to neurodegeneration.

What Neuroinflammation Does to the Brain

It leads to a cascade of harmful effects:

- Neuron damage and death: Pro-inflammatory cytokines cause oxidative stress, mitochondrial dysfunction, and excitotoxicity (overstimulation of neurons), leading to cell loss or impaired function.

- Disrupted neural circuits: Inflammation affects key areas like the hippocampus (memory/learning), prefrontal cortex (executive function, decision-making), and amygdala (emotion regulation).

- Blood-brain barrier compromise: Chronic inflammation weakens this protective barrier, allowing more harmful substances or immune cells into the brain.

- Altered neurotransmitter systems: It disrupts serotonin, dopamine, and glutamate signaling, which regulates mood, motivation, and cognition.

- Reduced synaptic plasticity and neurogenesis: The brain struggles to form new connections or generate new neurons, accelerating cognitive decline.

These changes contribute to neurodegenerative diseases (e.g., Alzheimer’s, Parkinson’s), psychiatric disorders (e.g., depression, anxiety, schizophrenia), and “sickness behavior” during illness.

Expected Behavioral and Cognitive Changes

Neuroinflammation doesn’t always cause obvious physical symptoms like a headache; instead, it often manifests as “invisible” changes in thinking, mood, and behavior. Common effects include:

- Cognitive symptoms:

    - Brain fog, difficulty concentrating, slowed thinking/processing speed.

    - Memory problems (especially episodic or working memory).

    - Reduced executive function (poor planning, decision-making, impulse control).

    - Confusion, disorientation, or delirium in severe cases.

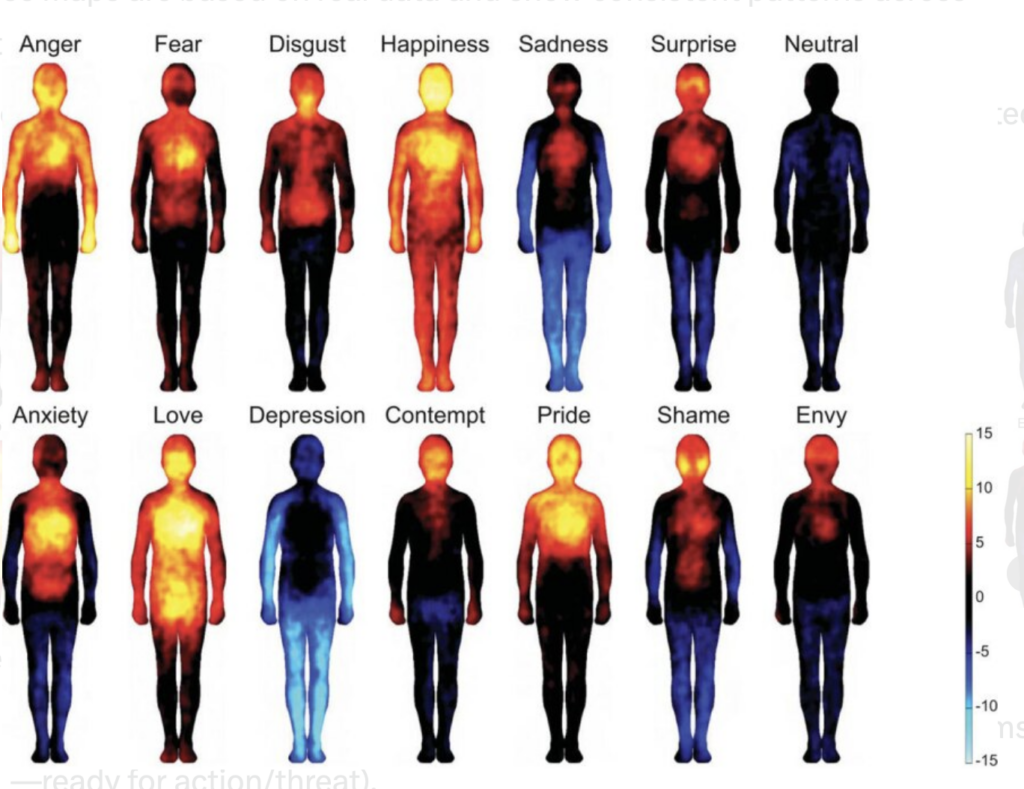

- Mood and emotional symptoms:

    - Increased anxiety, irritability, or emotional lability (rapid mood swings).

    - Depression-like states (low mood, anhedonia/loss of pleasure, hopelessness).

    - Heightened fear or hypervigilance.

    - Apathy, lack of motivation, or social withdrawal.

- Behavioral symptoms:

    - Fatigue, lethargy, or “wired but tired” feeling.

    - Reduced physical activity or “sickness behavior” (e.g., wanting to isolate, decreased appetite).

    - Impulsivity, aggression, or disinhibition.

    - In severe/chronic cases: Obsessive-compulsive traits, tics, or altered sleep patterns.

These can mimic or contribute to psychiatric conditions (e.g., depression, anxiety disorders, ADHD-like symptoms) or exacerbate existing ones. In animal studies and human observations, neuroinflammation is linked to depressive-like behaviors, social avoidance, and cognitive deficits, even without overt neurodegeneration.

Does Alcohol Make Neuroinflammation Worse?

Yes—alcohol, especially chronic or heavy consumption, significantly worsens neuroinflammation and accelerates related damage.

- Alcohol directly activates microglia (brain immune cells), triggering release of pro-inflammatory cytokines (e.g., IL-1β, TNF-α, IL-6) and oxidative stress.

- It compromises the blood-brain barrier, allowing peripheral inflammation to enter the brain.

- Chronic use creates a vicious cycle: Alcohol induces neuroinflammation → inflammation promotes craving, anxiety, and negative affect → more drinking → more inflammation.

- In alcohol use disorder (AUD), brain regions like the prefrontal cortex show elevated inflammatory markers (e.g., IL-1β levels reported at roughly twice those of non-dependent individuals). These elevations are linked to impulsivity, risky decision-making, and cognitive decline.

- Alcohol exacerbates neurodegeneration (e.g., in Alzheimer’s models) by amplifying amyloid/tau pathology, neuronal loss, and synaptic damage.

- Some evidence suggests even moderate drinking can elevate inflammatory markers. Heavy or chronic use (binge drinking or dependence), however, creates sustained neuroinflammation, contributing to mood disorders, cognitive impairment, and the maintenance of addiction.

In short: Alcohol doesn’t just “worsen” neuroinflammation—it actively fuels it, turning a protective response into a damaging one that impairs cognition, mood stability, and behavior over time. Reducing or eliminating alcohol is often recommended in managing neuroinflammatory conditions. If this relates to personal concerns, consulting a healthcare professional (e.g., neurologist or psychiatrist) for evaluation is key, as neuroinflammation can stem from many causes.

tell me more about the amygdala (emotion regulation) and “Impulsivity, aggression, or disinhibition”

The amygdala is a small, almond-shaped structure (one in each hemisphere) deep in the brain’s temporal lobe, part of the limbic system. It’s often called the “emotional alarm center” because it plays a central role in processing and responding to emotions—particularly fear, threat, anger, and other intense affective states. While it’s crucial for emotion regulation, it doesn’t “regulate” emotions in the calming sense; instead, it detects and assigns emotional salience (importance) to stimuli, rapidly triggering physiological and behavioral responses.

Role in Emotion Regulation

- Detection and rapid response: The amygdala evaluates sensory input (sights, sounds, faces, smells) for emotional significance, especially threats. It can bypass slower conscious processing via a direct “low road” pathway from the thalamus, triggering quick reactions like fight-or-flight. This is adaptive for survival but can lead to overreactions if unchecked.

- Modulation by prefrontal cortex: Healthy emotion regulation relies on “top-down” control from prefrontal areas (e.g., orbitofrontal cortex [OFC], ventromedial PFC [vmPFC], dorsolateral PFC [DLPFC], anterior cingulate cortex [ACC]). These regions inhibit or reappraise amygdala signals, reducing intensity of fear/anger responses and allowing calmer, context-appropriate behavior.

- Connectivity issues: When amygdala-prefrontal connectivity is weak or imbalanced (e.g., hyperactive amygdala with hypoactive PFC), regulation fails—emotions become overwhelming, leading to poor impulse control and exaggerated responses.

In short: The amygdala generates the raw emotional “signal” (e.g., “this is dangerous/angering”); prefrontal regions help “turn down the volume” or reinterpret it. Dysfunction often shows as amygdala hyperactivity (overreacting) combined with prefrontal underactivity (poor braking).

Link to Impulsivity, Aggression, and Disinhibition

These behaviors arise when amygdala-driven emotional signals overpower inhibitory control:

- Impulsivity: Amygdala hyperactivity biases toward immediate emotional reactions over delayed consideration. Reduced prefrontal inhibition means poor impulse control—acting on urges without forethought. Studies link smaller amygdala volumes or altered connectivity in ADHD to higher impulsivity scores.

- Aggression: The amygdala (especially central/medial nuclei) drives reactive/impulsive aggression via circuits to hypothalamus and periaqueductal gray (PAG), activating fight responses. Hyperactivity to threats or anger cues (e.g., fearful/angry faces) correlates with explosive outbursts. In intermittent explosive disorder or borderline personality disorder, amygdala hyperactivation predicts impulsive aggression.

- Disinhibition: Weakened top-down PFC control leads to reduced behavioral restraint. This shows as emotional lability, inappropriate social responses, risk-taking, or aggression without remorse. In psychopathy or borderline traits, amygdala hypo- or hyperactivity (context-dependent) disrupts balanced emotional processing, contributing to disinhibited behavior.

Examples from research:

- In impulsive aggression, amygdala hyperactivity to provocative stimuli (e.g., angry faces) combines with reduced vmPFC/OFC modulation, leading to quick, uncontrolled reactions.

- In youth with irritability/aggression, altered amygdala responses to emotional faces (e.g., greater reactivity to happiness vs. fear) mediate frustration and disruptive behavior.

- Neuroimaging shows that in disorders like ADHD or conduct problems, amygdala-prefrontal decoupling predicts impulsivity and aggression.

How This Ties to Previous Topics (e.g., Neuroinflammation, Chronic Stress)

Chronic hyperarousal (e.g., from constant screen use or outrage-driven content) may contribute to neuroinflammation via cytokines, overactivating microglia and disrupting amygdala-PFC balance. This can amplify threat detection (hypervigilance), weaken inhibition, and promote disinhibited, aggressive, or impulsive outbursts. Alcohol worsens this by further activating microglia and cytokine release, heightening amygdala reactivity and reducing PFC control. The result can be a vicious cycle that fuels aggression and impulsivity.

In essence: A balanced amygdala flags emotions appropriately and lets prefrontal areas regulate them. When dysregulated (hyperactive, under-inhibited), it drives impulsive, aggressive, disinhibited behavior—turning emotional signals into uncontrolled actions. If this resonates personally, practices like mindfulness or therapy can strengthen PFC-amygdala connectivity over time.

if people you know are displaying impulsivity/mild aggression/uncontrolled flailings/not respecting personal space in real life, what can be done to help?

If people you know are showing impulsivity, mild aggression, uncontrolled flailing (e.g., sudden jerky or exaggerated movements), and disregard for personal space in real life, these behaviors can stem from nervous system dysregulation, particularly amygdala hyperactivity combined with reduced prefrontal cortex inhibition. Emotional signals can then feel overwhelming, producing quick, poorly filtered reactions with little forethought or awareness of social cues. This is not necessarily intentional malice. The brain may be stuck in a “threat mode” where everything registers as urgent or provocative, so impulses fire before the brakes engage.

Helping someone in this state requires compassionate, non-confrontational support that prioritizes safety (yours and theirs), de-escalation, and gentle redirection toward regulation. Avoid shaming, yelling, or physical restraint unless there’s immediate danger, because these can escalate amygdala-driven responses. Here’s a practical, step-by-step approach drawing on strategies from trauma-informed care, DBT (dialectical behavior therapy), polyvagal-informed approaches, and behavioral support:

- Prioritize Your Own Safety and Co-Regulation First

  Before helping them, regulate yourself. Take slow, deep breaths, create physical distance if needed, and speak in a calm, low, steady tone. A regulated nervous system can help “lend” calm to theirs through co-regulation, since people tend to pick up on each other’s level of arousal. If they invade your space or flail aggressively, calmly step back or put something between you (e.g., “I need a little more space right now so we can talk safely”). Model the boundary without escalating.

- Name and Validate the Emotion Without Judgment

  Use simple, non-accusatory language to reflect what you see: “It looks like you’re feeling really frustrated/upset right now. I’m here with you.” Naming emotions can help bring the prefrontal cortex back online and reduce amygdala dominance. Avoid “calm down” (it often backfires); instead, validate: “This feels overwhelming for you.” Validation lowers perceived threat and makes them more receptive.

- Offer Gentle Redirection and Grounding Tools

  Suggest quick nervous system resets they can do with you:

    - Deep breathing together (e.g., 4-second inhale, 6-second exhale).

    - Sensory grounding (e.g., “Feel your feet on the floor,” holding something cold, or squeezing a stress ball).

    - Movement redirection (e.g., “Let’s walk around the block together,” or jumping jacks to discharge energy safely).

  These help shift the body from sympathetic hyperarousal toward parasympathetic calm without confrontation.

- Set Clear, Kind Boundaries Around Behavior

  Use “I” statements to communicate limits: “When you get close like that, I feel unsafe. I need a bit more space.” Or: “Flailing arms make it hard for me to stay calm. Can we keep hands to ourselves while we talk?” Be consistent but compassionate; consistency helps rebuild trust in boundaries. If they cross lines repeatedly, calmly disengage: “I’m going to step away until we can both feel safer.”

- Encourage Professional Support When Appropriate

  Gently suggest help without making it feel like punishment: “I’ve noticed things feel really intense lately. Would you be open to talking to someone who specializes in this? It helped me/my friend.” Options to recommend:

    - Therapy (DBT for emotion regulation and impulsivity; somatic experiencing or trauma-focused CBT for nervous system work).

    - Mindfulness-based practices (apps like Headspace, or simple breathing exercises).

    - Medical evaluation if needed (e.g., for ADHD, anxiety, or neuroinflammation contributing to dysregulation).

  Offer to go with them to the first appointment or help research options.

- Model and Reinforce Regulation in Daily Life

  Show what calm looks like: speak slowly, use open body language, and pause before responding. Praise small wins: “I noticed you took a breath before reacting. That was awesome.” Positive reinforcement helps strengthen the new pattern over time.

- Watch for Escalation and Have a Safety Plan

  If aggression intensifies (e.g., threats, property damage), prioritize safety: leave the situation, call for help if needed, or involve a professional (e.g., a crisis line). Have a pre-planned “exit strategy” for both of you.

These behaviors are often signs of a nervous system that’s overwhelmed and needs support, not punishment. Progress can be slow, but consistent, compassionate responses help rewire the brain toward better regulation. If it’s severely impacting safety or daily life, encourage (or require, in professional settings) professional intervention—many people improve significantly with the right tools. You’re doing a kind thing by seeking ways to help.

how does one know whether it is nervous system dysregulation or Dark Tetrad? how, if any, should these be approached differently?

Differentiating Nervous System Dysregulation from Dark Tetrad Traits

Nervous system dysregulation is a descriptive term rather than a formal diagnosis. It refers to an imbalance in the autonomic nervous system (ANS), often resulting from chronic stress, trauma, or environmental factors, that leads to hyperarousal (e.g., anxiety, impulsivity) or shutdown states (e.g., dissociation, numbness). It is state-based: it fluctuates and can improve with intervention, reflecting how the body responds to perceived threats via the fight-flight-freeze-fawn system.

In contrast, the Dark Tetrad (narcissism, Machiavellianism, psychopathy, and sadism) comprises relatively stable personality traits characterized by low empathy, manipulation, callousness, and, in the case of sadism, enjoyment of others’ harm. These traits are generally viewed as enduring patterns rooted in genetics, early environment, and neurobiology, not as acute dysregulation.

The two can overlap. Both can manifest as impulsivity, aggression, or emotional volatility: psychopathy often involves abnormal emotion processing, while chronic stress can mimic callous traits through emotional numbing. However, dysregulation is typically reactive and situational (e.g., triggered by stress), whereas Dark traits tend to be proactive and interpersonal (e.g., manipulation for gain, with little remorse). Latent profile analyses have identified distinct clusters, for example people high in Dark traits who also show emotion dysregulation vs. people low in these traits who regulate emotions better.

How to Distinguish: Key Indicators and Assessment

Self-observation alone isn’t reliable—overlaps like impulsivity or aggression can confuse the picture. Professional evaluation is essential for accurate differentiation:

- Behavioral patterns: Dysregulation usually looks reactive (e.g., outbursts from overwhelm that fluctuate with stress levels and are followed by remorse). Dark Tetrad behavior is more instrumental (e.g., calculated aggression for control, a consistent lack of empathy or guilt, enjoyment of harm). Ask: Is this situational (e.g., post-trauma) or lifelong and trait-like? Does it improve with rest and safety cues (dysregulation) or persist regardless (Dark traits)?

- Physiological markers: Dysregulation may show up as elevated cortisol or low heart rate variability (HRV), which can be measured through autonomic testing (e.g., polyvagal-informed assessments). Dark traits, especially psychopathy, correlate with blunted emotional responses (e.g., low heart-rate reactivity, reduced amygdala-PFC connectivity). Tools: HRV monitoring apps or biofeedback for dysregulation; fMRI/EEG in research settings for traits. None of these markers is diagnostic on its own.

- Psychological assessments:

    - For Dark Tetrad: Self-report scales like the Short Dark Triad (SD3) or Dirty Dozen assess the three Dark Triad traits; sadism requires a separate measure or the Short Dark Tetrad (SD4). High scores indicate stable tendencies toward low empathy and manipulation, typically without accompanying distress.

    - For dysregulation: The Difficulties in Emotion Regulation Scale (DERS) or polyvagal-informed checklists measure state-based problems; a trauma history often points in this direction.

  Professional diagnosis (e.g., DSM-5 criteria for personality disorders vs. somatic/trauma assessments) is key. Self-report tools like these provide clues but aren’t definitive.

- Contextual clues: Dysregulation often links to recent stressors/trauma and improves with rest; Dark traits are consistent across situations, with interpersonal exploitation. Motivation for change: Those with dysregulation often seek help; Dark traits may lack insight or desire to change.

Different Approaches: Treatment and Management

These require distinct strategies—dysregulation is more amenable to change (as a response pattern), while Dark Tetrad traits are stable and harder to alter (limited empathy/motivation hinders progress).

- For Nervous System Dysregulation: Focus on restoration and rebalancing through body-based and trauma-informed therapies that rebuild a sense of safety and regulation.

    - Somatic Experiencing, mindfulness, or polyvagal exercises to calm hyperarousal.

    - CBT/EMDR for cognitive reappraisal and trauma processing.

    - Lifestyle: sleep, exercise, and practices thought to engage the vagus nerve (e.g., slow deep breathing, cold exposure).

    - Good prognosis: It often improves substantially with consistent practice, since it is an adaptive response pattern rather than an inherent trait.

- For Dark Tetrad Traits: Emphasize management and harm reduction over cure, as the traits resist change due to low insight and motivation.

    - DBT/CBT to build skills in empathy, impulse control, or agreeableness, which may indirectly reduce how strongly the traits are expressed.

    - Motivational interviewing to foster a desire for change.

    - Limited efficacy: Focus on external boundaries (e.g., support for the people affected) rather than expecting full transformation; psychopathy in particular often responds poorly to treatment.

- Key difference: Treatments for dysregulation target root causes (stress/trauma), while approaches for Dark traits manage behavior, because the core traits persist.

In summary, differentiation relies on professional tools that assess stability vs. reactivity, and treatment goals diverge: restoration for dysregulation, ongoing management for traits. Seek expert help for accurate identification and tailored support.