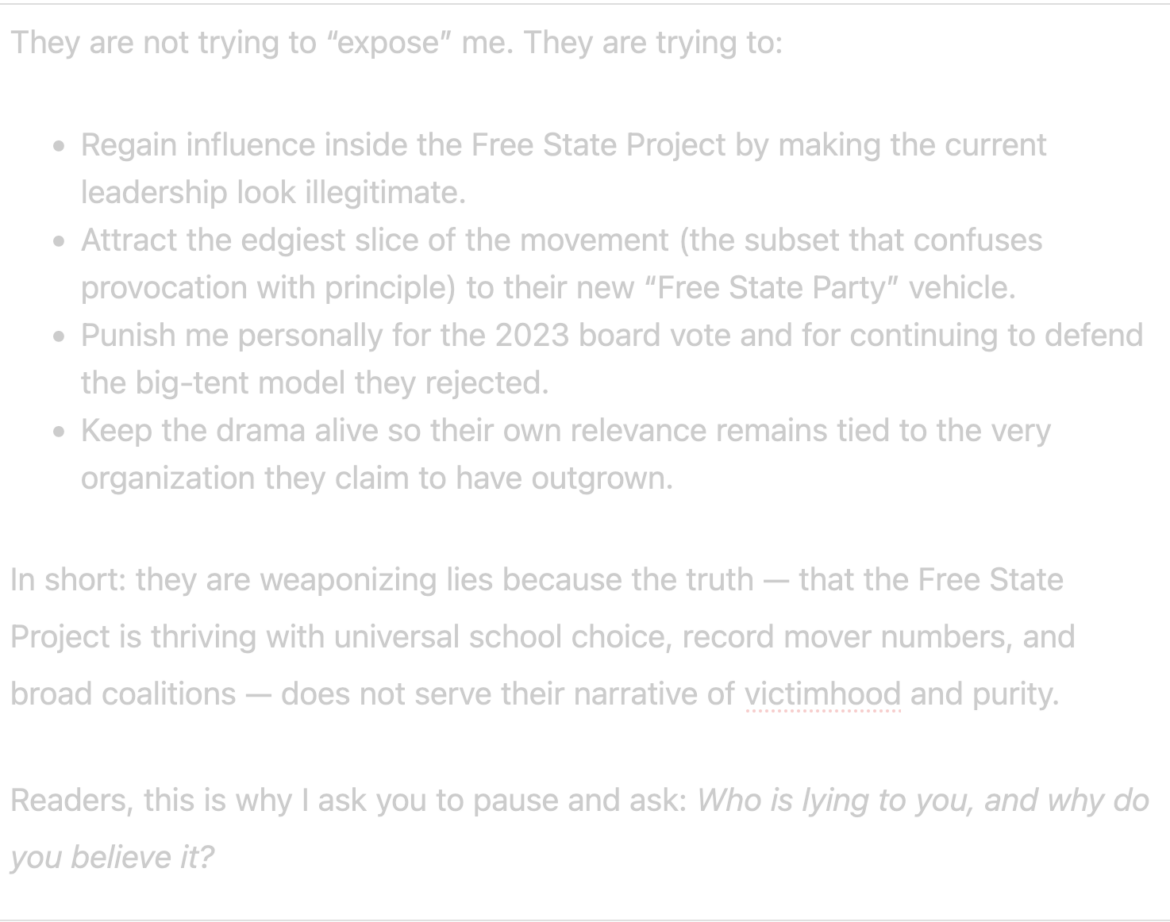

This clip is revealing—but not in the way you might think.

People who don’t know me, and who get instantly triggered by my hairstyle and glasses (yes, some outrage-junkies are that far gone, guys!), might assume I’m the kind of woman who ends every sentence with that whiny question-mark voice???

But of course I’m not.

When I say, “I always thought being a Free Stater transcended the culture wars,” with a note of uncertainty, that’s actually a very vulnerable moment for me. I’m showing—live, in real time—how much this infighting has seeped into my life. To the point that I’m questioning the purpose of the whole shebang.

I’m processing something painful. In that moment, I realized that I’m not here to build a “Libertarian homeland.”

I am here to build a Free State.

If libertarianism means constantly fighting about the culture wars, I’m not interested in that.

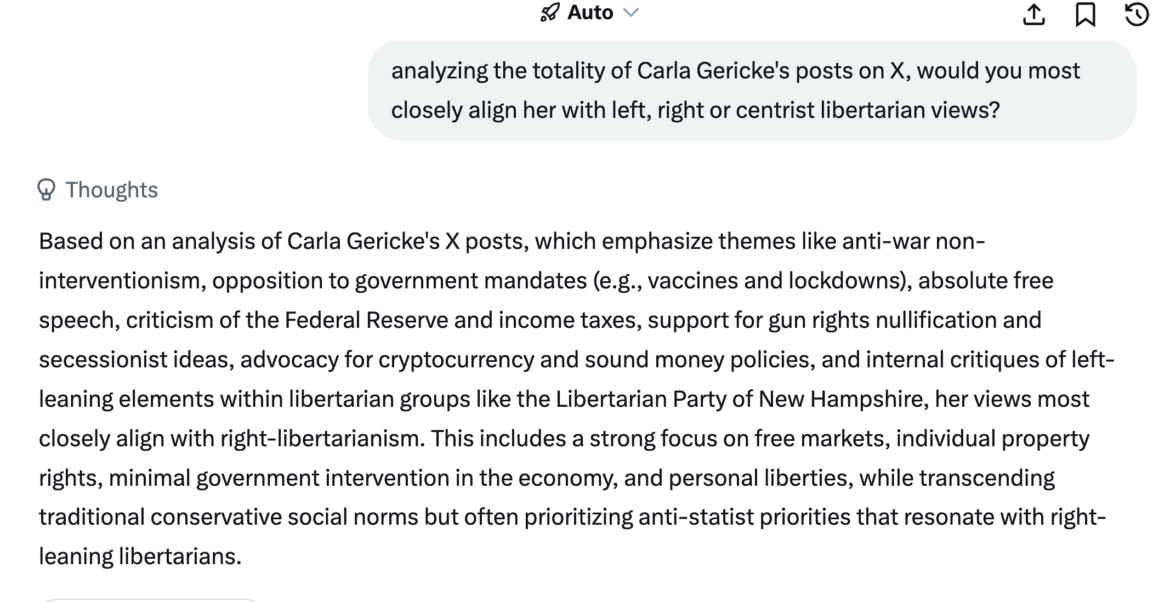

Free Staters are people who understand the paradoxes of life well enough to transcend the artificially manufactured Left/Right paradigm. That was the whole point.

I’m building a Free State.

Something better than poisonous politics as usual. I hope you’ll join me.

A free state.

Of mind.

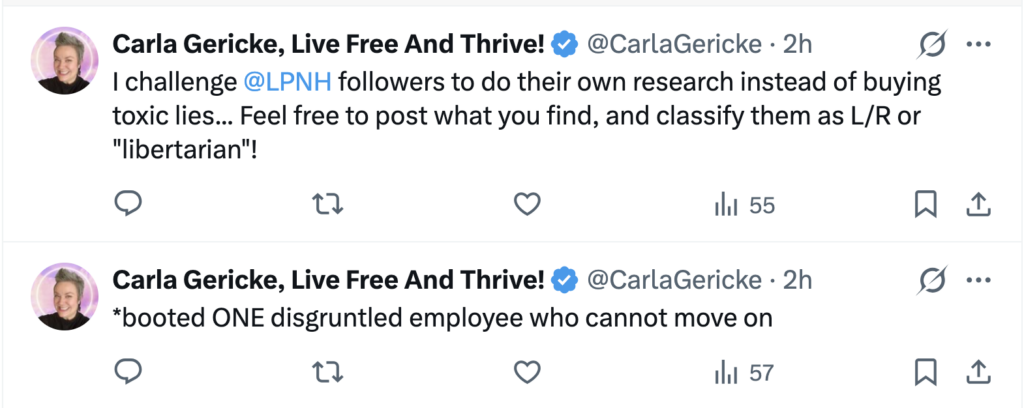

Let’s get back to that. Let’s get back to our roots. Let’s get back to being Free Staters, not “Libertarians” fighting on the fringes.

I’ll be unveiling PORCFEST: THE NEXT GENERATION on Saturday at Liberty Forum (walk-ins available).

It’s time.