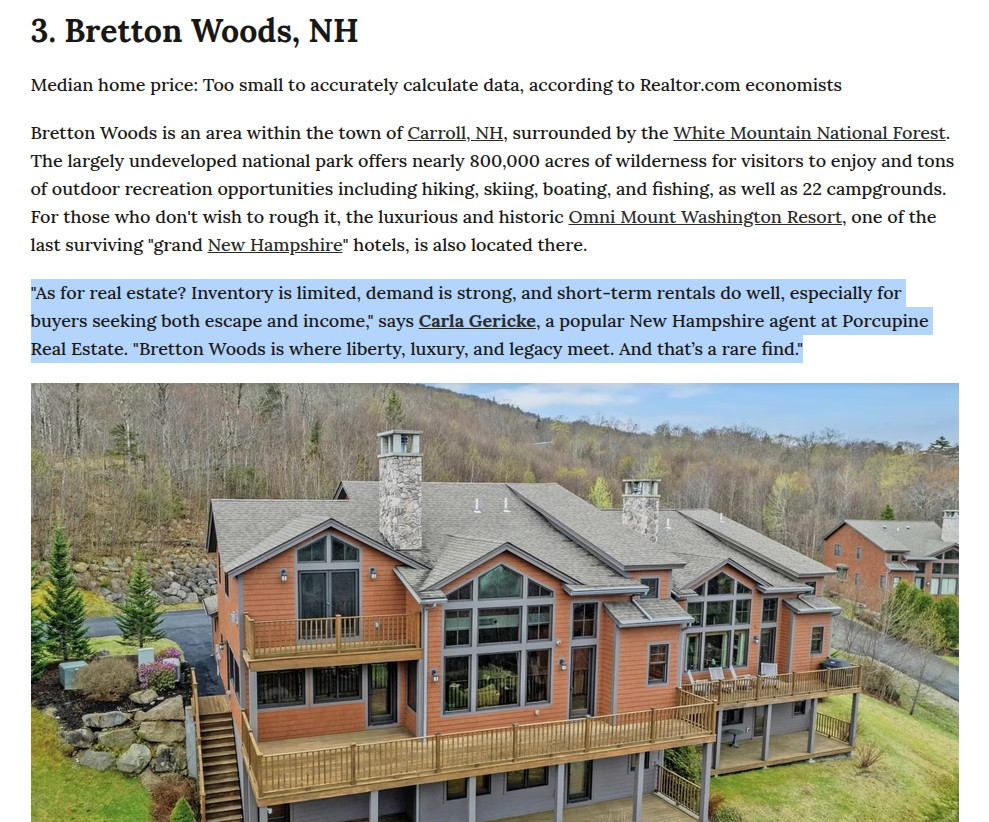

Day 202 of My Living Xperiment: The Pine; I whine; it's fine. https://t.co/ATniEk0XH0

— Carla Gericke, Live Free And Thrive! (@CarlaGericke) July 21, 2025

I was delighted to get a quick tour of the latest community center to open. I give you The Pine in downtown Lancaster. Get involved! https://t.co/5jZNFs2M1G pic.twitter.com/a1fAEpNMB4

— Carla Gericke, Live Free And Thrive! (@CarlaGericke) July 21, 2025